With Microsoft //Build right around the corner, there is little wonder about what will be the major theme of the show… AI. But if you’ve been paying attention, you know the cat is already out of the bag! Local AI APIs are available for Windows developers today, and implementing them is easy!

There is already a lot of content on how to get started, with more details about building with the Windows Copilot Runtime (WCR):

- Official docs: https://learn.microsoft.com/en-us/windows/ai/apis/

- Official Samples: https://github.com/microsoft/WindowsAppSDK-Samples/tree/main/Samples/WindowsCopilotRuntime

- Tamás Deme: Getting started with NPU workloads

- Lance McCarthy: https://dvlup.com/blog/2025.5.17-add-copilot-to-your-app-in-10m/

- Thomas Claudius Huber: https://www.thomasclaudiushuber.com/2025/05/03/use-windows-ai-in-wpf/

TL;DR – You need to be on a Windows Insider build on a Copilot+ PC to use these APIs today (May 18th). The available APIs cover LLMs, Super Resolution, OCR, Image segmentation, and Object removal.

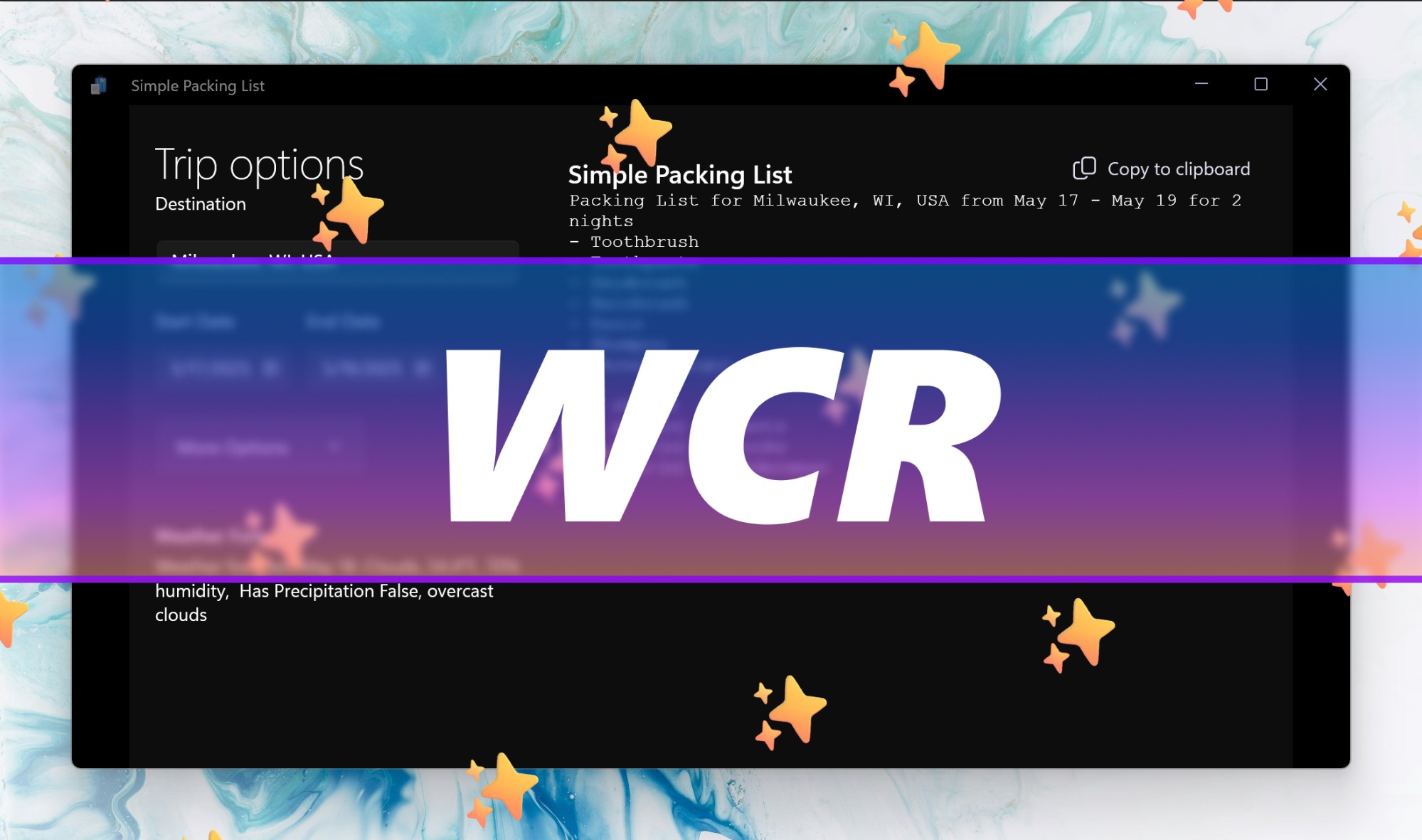

To learn by doing, I made a basic app to help make a packing list: https://github.com/TheJoeFin/SimplePackingList

Here are the questions I had before building the app and what I learned along the way.

- Can you submit an app using these APIs to the Microsoft Store?
  - No, experimental SDKs are not accepted (as of May 18th, 2025).

- Is it easy to detect if the APIs are on a supported PC?
  - No, but you can copy code from the samples and it isn’t too bad.

- Can you build and code the app on a device which doesn’t meet the requirements?
  - Yes, but if you try to call the local models you’ll get a runtime error.
  - Always check to make sure the device has the capability first.

- Can the models be used with older Windows frameworks?
  - Yes, Microsoft and Thomas have examples of how that works.

- Is it easy to work with the APIs?
  - Yes, but I did have to copy over lots of helper code from the Microsoft samples to really get it working.
  - You have to get images into exactly the right format for them to work.
  - Unsurprisingly, all work with the models needs to be async.

- Is the LLM fast?
  - Yes when streaming, not so much when awaiting a full response.
  - Factor this into your design.

- Does the LLM take up a sizable chunk of RAM when using it?
  - Yes. I need to do more testing to figure out exactly how that works, how long the memory is held on to, and whether it is shared.

- Is the OCR good?
  - Yes! Much better than the models which shipped via WinRT in Windows 10.
  - But it is slower than the Windows 10 API… trade-offs!

- Are the Image Segmentation and extraction APIs good?
  - They are fine, but less than pixel-accurate from what I can tell.

- Do you have to manage the models?
  - No.

- Are the APIs integrating the app with Copilot or the Copilot app?
  - Not today (May 2025).
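
Two of the answers above (check for capability before calling the model, and prefer streaming over awaiting a full response) can be sketched in a framework-neutral way. This is a minimal Python sketch and not the Windows API: `FakeLocalModel`, `is_available`, `stream`, and `generate_streaming` are all hypothetical names made up for illustration, and the per-token delay only simulates generation time. The real calls live in the official docs and samples linked at the top.

```python
import asyncio
from typing import AsyncIterator, Callable, List, Optional


class ModelUnavailableError(RuntimeError):
    """Raised when the (hypothetical) local model is called on an unsupported device."""


class FakeLocalModel:
    """Stand-in for a local LLM. Hypothetical; not a real Windows API."""

    def __init__(self, supported: bool, tokens: List[str], delay: float = 0.05):
        self.supported = supported
        self._tokens = tokens
        self._delay = delay

    def is_available(self) -> bool:
        # Assumption: the real platform exposes some kind of readiness check.
        return self.supported

    async def stream(self, prompt: str) -> AsyncIterator[str]:
        # Calling the model on an unsupported device fails at runtime.
        if not self.supported:
            raise ModelUnavailableError("local model not supported on this device")
        for token in self._tokens:
            await asyncio.sleep(self._delay)  # simulate per-token generation time
            yield token


async def generate_streaming(
    model: FakeLocalModel,
    prompt: str,
    on_partial: Callable[[str], None],
) -> Optional[str]:
    """Check capability first, then push partial text to the UI as tokens arrive."""
    if not model.is_available():
        return None  # caller can hide the feature or show a fallback
    parts: List[str] = []
    async for token in model.stream(prompt):
        parts.append(token)
        on_partial("".join(parts))  # update the UI with the text so far
    return "".join(parts)


if __name__ == "__main__":
    demo = FakeLocalModel(supported=True, tokens=["socks", ", ", "passport"])
    final = asyncio.run(generate_streaming(demo, "Pack for a weekend trip", print))
    print("final:", final)
```

The design point is that the user sees text as soon as the first token arrives instead of waiting for the whole response, and an unsupported device is handled by returning `None` rather than hitting a runtime error.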

If you have any other questions about coding with the local models via the Windows Copilot Runtime feel free to reach out and I’ll do my best to answer and update the list above!

Joe